“One surprising source of insight into the question of why our minds work the way they do comes from the attempt to replicate them – the study of artificial intelligence.” — Jochen Szangolies, PhD

Editor: Jochen Szangolies, PhD, who works on quantum mechanics and in particular on quantum contextuality, wrestles brilliantly with the concept of Buddha Nature as it relates not only to mind but also to Artificial Intelligence. As we try to emulate the human mind, and effectively push AI towards consciousness, does this mean the “machine” develops Buddha Nature? As a “test” of AI, here’s an image on the theme of AI Buddha Nature, created by AI:

By Jochen Szangolies, PhD

Biography at bottom of feature. Profile on Academia.edu.

Buddhist philosophy sees us as systematically mistaken about both ourselves and the world, and views suffering (Sanskrit: duḥkha, Pāli: dukkha) as a direct consequence of this misperception or ignorance (Sanskrit: avidyā, Pāli: avijjā). It would be futile to attempt an account of Buddhist doctrine here with any pretensions to completeness, but the general gist is not too far from the following: We are mistaken about the sort of creatures we are, and likewise, about the world we inhabit. Concretely, we believe that the world around us has certain immutable and absolute characteristics, and that we, ourselves, possess a certain fixed core or true nature called the ‘self’ – but in fact, these are categorically mistaken, even inconsistent, notions, which beget desires we can only pursue in vain.

The idea that some of our most deeply and intuitively held beliefs about ourselves and the world we inhabit should simply be mistaken is challenging, and upon first encounter, may appear downright offensive. What could be the reason for this deception? Should we not expect that evolution, if nothing else, has tuned our faculties towards faithfully representing ourselves within our environment, maximizing our chances of successfully interacting with it?

In this essay, I want to suggest that one surprising source of insight into the question of why our minds work the way they do comes from the attempt to replicate them – the study of artificial intelligence.

Another image generated by AI:

1 What AI’s Errors Teach Us

Artificial intelligence based on neural networks has been, by most accounts, a massive success story. AI superlatives have dominated headlines in the past few years. In 2016, world Go champion Lee Sedol was defeated by AlphaGo, developed by Google’s DeepMind. [Ed: Video inset below.] More recently, the Elon Musk-backed nonprofit OpenAI pronounced its text generator GPT-2 “too dangerous to release”. Phillip Wang, a software engineer at Uber, used research by Nvidia to create one of 2019’s greatest viral hits so far: a site that does nothing but display a new, AI-generated human face each time it is loaded.

It is not hard to see, in this age of fake news and alternative facts, that such feats may be cause for concern. However, the greater challenge posed by neural-network-based AI may not lie with its successes, but rather with its failures – and concretely, with its inability to justify itself. When a neural network fails at its task, it often does so in a bizarre fashion.

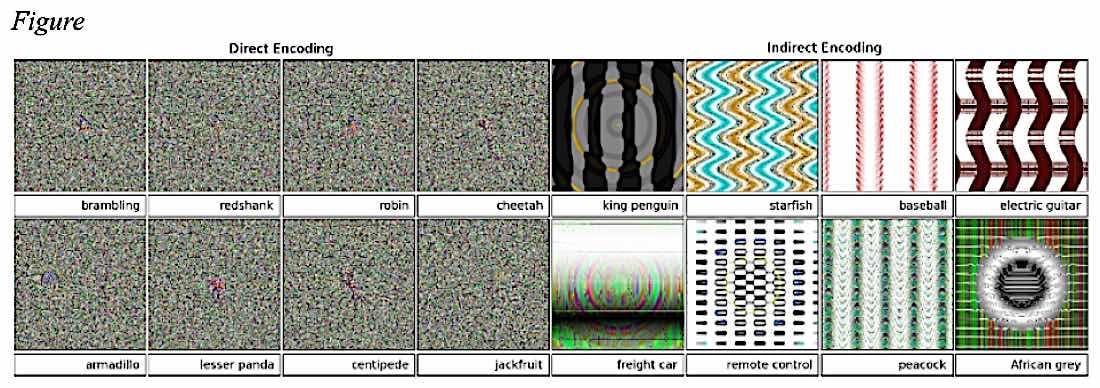

This can have intriguing effects: Research performed at the University of Wyoming shows how to deliberately create images designed to fool successful image classifiers, for instance goading them to confidently identify what looks like random noise to human eyes as various animals (see Figure).

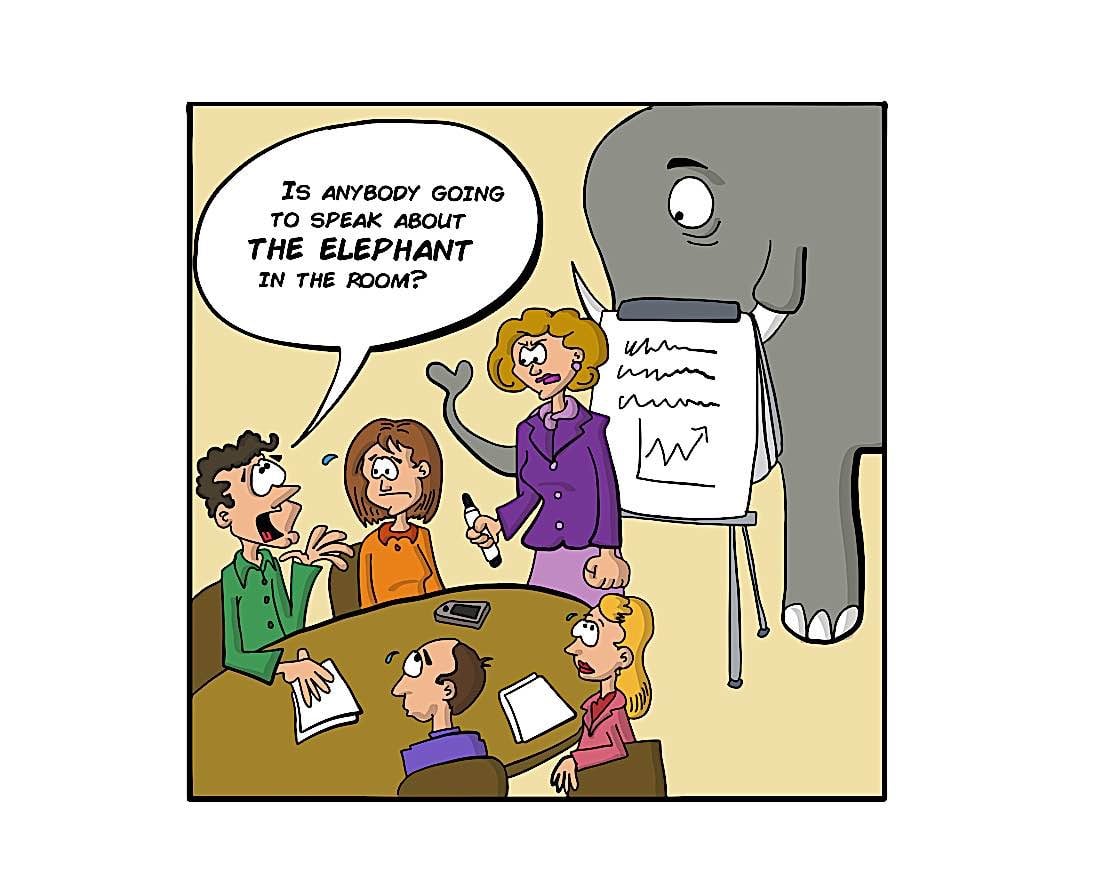
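The sketch below is a toy illustration of how such fooling images can be found, not a reproduction of the Wyoming study, which worked with large trained image classifiers and search procedures such as evolutionary algorithms. Here, a tiny linear softmax ‘classifier’ with random weights stands in, hypothetically, for a trained network, and a simple hill-climbing loop mutates random noise one pixel at a time, keeping any change that raises the classifier’s confidence in the label ‘cheetah’. The class names, image size and number of steps are all assumptions.

```python
import math
import random

CLASSES = ["cheetah", "armadillo", "lion"]  # hypothetical labels
N_PIXELS = 64  # a tiny 8x8 "image", flattened


def make_classifier(seed=0):
    """Random weights for a toy linear softmax classifier (one weight row per class).

    A stand-in for a trained network; it has learned nothing about animals.
    """
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(N_PIXELS)] for _ in CLASSES]


def predict(weights, image):
    """Softmax class probabilities for a flattened image with pixels in [0, 1]."""
    logits = [sum(w * x for w, x in zip(row, image)) for row in weights]
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def fool(weights, target, steps=3000, seed=1):
    """Hill-climb from random noise, keeping any single-pixel mutation
    that raises the classifier's confidence in the target class."""
    rng = random.Random(seed)
    image = [rng.random() for _ in range(N_PIXELS)]
    start = predict(weights, image)[target]
    best = start
    for _ in range(steps):
        i = rng.randrange(N_PIXELS)
        old = image[i]
        image[i] = rng.random()  # mutate one pixel to a fresh random value
        p = predict(weights, image)[target]
        if p > best:
            best = p
        else:
            image[i] = old  # revert mutations that do not help
    return image, start, best


if __name__ == "__main__":
    w = make_classifier()
    target = CLASSES.index("cheetah")
    img, before, after = fool(w, target)
    print(f"P(cheetah) on the initial noise: {before:.3f}")
    print(f"P(cheetah) after the search:     {after:.3f}")
```

Nothing in the search ever asks whether the result looks like a cheetah to a human; the classifier simply ends up confident about an ‘image’ that is still noise, which is the kind of failure the study exposed.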

In another study, researchers confronted AI systems with the proverbial elephant in the room: Into a living room scene containing many objects easily recognized by a computer vision system, an elephant is introduced. This has surprising effects: Sometimes, the elephant isn’t recognized at all; other times, it is, but its presence causes objects previously labeled correctly to now be misidentified, such as labeling a couch a chair; yet other times, it is itself identified as a chair.

Failures like these raise serious concerns for the accountability of artificially intelligent agents: Just imagine what a misclassification of this sort could mean for an autonomous car. Compounding this problem is that we can’t just ask the AI why it came up with a particular classification – where humans can appeal to domain-specific knowledge, such as that a cheetah is a four-legged predatory spotted feline and not a collection of random pixels, a neural network only has its classification result to appeal to. To it, the random noise looked, inscrutably, ‘cheetah-like’.

This problem has spurred DARPA to usher in a ‘third wave’ of AI. In this classification, the first wave of AI consisted of so-called ‘expert systems’, which relied essentially on hard-coded expert knowledge to perform their tasks, while the second wave comprises agents relying on neural network-based computation, trained on massive data sets to accomplish feats of classification.

Third wave AI, on the other hand, will add a crucial capacity that enables an autonomous agent both to justify itself and to improve its performance, limiting the incidence of ‘bizarre’ failures. Roughly speaking, a third wave system will possess a model of the objects within its domain; that is, it will be able to refer back to the fact that a cheetah is a four-legged spotted animal in order to prevent misclassifications. In addition, if asked why it classified a particular picture as that of a cheetah, it can appeal to that model, answering “because the animal it shows has spots”.
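A minimal sketch of what ‘appealing to a model’ might look like, assuming, hypothetically, that separate detectors report attributes such as ‘spotted’ alongside the neural network’s proposed label. The `DOMAIN_MODEL` table, the `Verdict` type and the `justify` function are illustrative inventions, not DARPA’s design or any existing system.

```python
from dataclasses import dataclass
from typing import Optional, Set

# Hypothetical symbolic model of the domain: the attributes an instance of each
# category must show. Illustrative only; not any real third-wave system.
DOMAIN_MODEL = {
    "cheetah": {"four-legged", "spotted", "feline"},
    "zebra": {"four-legged", "striped"},
}


@dataclass
class Verdict:
    label: Optional[str]  # None means the proposed label was rejected
    reason: str


def justify(proposed_label: str, detected: Set[str]) -> Verdict:
    """Check a network's proposed label against the domain model and give a reason.

    `detected` is assumed to come from separate (hypothetical) attribute detectors.
    """
    required = DOMAIN_MODEL.get(proposed_label)
    if required is None:
        return Verdict(None, f"no model of '{proposed_label}' to check against")
    missing = required - detected
    if missing:
        return Verdict(None, f"rejected '{proposed_label}': no evidence of {', '.join(sorted(missing))}")
    return Verdict(proposed_label, f"'{proposed_label}' because it is {', '.join(sorted(required))}")


if __name__ == "__main__":
    # A genuine cheetah photo versus noise that the network merely found 'cheetah-like'.
    print(justify("cheetah", {"four-legged", "spotted", "feline"}))
    print(justify("cheetah", set()))
```

The design choice is deliberately crude: the network still proposes, but the model can veto, and either way the system can state a reason in terms of the model rather than pointing at its own output.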

Video reference (added by editor): Lee Sedol’s legendary match-up with the AI AlphaGo:

This capacity for explaining or justifying itself is crucial to human-style cognition. Psychologists, going back to William James, speak of the ‘dual-process model’ of human thinking: Thought, the model claims, consists of two modes – a fast, implicit, unconscious and automatic facility of recognition, as when you immediately judge the mood of a person by their facial expression, and a slow, deliberate, effortful conscious process of reasoning, as in solving a math puzzle. In his bestselling book ‘Thinking, Fast and Slow’, Daniel Kahneman calls these different faculties simply ‘System 1’ and ‘System 2’.

Neural networks, then, excel at System 1-style recognition tasks but, just as we occasionally have trouble justifying our intuitions, lack the capacity to provide a step-by-step account of how they reach their judgments. DARPA’s third wave initiative thus calls for adding a System 2 to artificial intelligence. As AI researcher Ben Goertzel put it as early as 2012:

Bridging the gap between symbolic and subsymbolic representations is a – perhaps the – key obstacle along the path from the present state of AI achievement to human-level artificial general intelligence.

Here, ‘subsymbolic representation’ refers, essentially, to neural network-style computation, as opposed to the symbolic representation of explicit models.

Whereas System 1 produces automatic, fast judgments, System 2 is the deliberate, step-by-step chain of reasoning that we are probably most accustomed to identifying with ‘thought’: the process by which we derive conclusions from premises, follow a recipe, or find reasons, often for our own behavior.

We tend to think of System 2 as taking primacy in our mental landscape, but much psychological research seems to indicate the opposite: System 2 often acts as an ancilla to System 1, producing retrospective justifications and coming up with reasons why we acted or felt a certain way only after the fact.

System 2 allows us to justify ourselves, to produce narratives explaining our behavior. But in doing so, it may go wrong: We often confabulate reasons for our actions in cases where we don’t have introspective access to their true causes. An extreme demonstration of this sort of flawed self-assessment comes from split-brain patients. In such cases, the corpus callosum, the ‘bridge’ between the two brain hemispheres, has been severed – a procedure used, for instance, in the treatment of severe cases of epilepsy. This leads to the possibility of certain pieces of information not being globally available, yet influencing behavior. Thus, the regions of the brain responsible for governing behavior and those generating justifications may become dissociated, one acting on information the other has no access to.

As an example, a split-brain patient may be shown the instruction to ‘walk’ only in the left half of their field of vision. Because of the way the optic nerve fibers cross on their way to the brain, this information will be available exclusively to the right hemisphere. If the patient then gets up and wanders off, they are asked for a reason; however, the speech centers of the brain are, in most people, housed in the left hemisphere. Consequently, the part of their brain that provides verbal reports has no access to the information that caused them to wander around. Yet, rather than truthfully reporting that they have no idea why, most offer up a confabulated reason, such as “I wanted to get a coke.”

System 2 is thus not merely an error-correcting device to keep System 1’s free-wheeling associativeness on the straight and narrow, but may over-correct, inventing reasons and justifications where none are available. The models of the world it offers may mislead us, even as they guide our action.

Indeed, to a certain extent, every model must mislead: An orrery is not the solar system, and a picture of a pipe is not a pipe. If there were no difference between the model and the thing modeled, then they would be one and the same; but then, modeling would not accomplish anything. Consequently, however, it follows that our model of the world necessarily differs from the world. The map is not the territory, and we confuse the two at our own peril.

2 Dual-Process Psychology and the Four Noble Truths

In Buddhist thought, the step-by-step reasoning of System 2 is known as ‘discursive thinking’ (Sanskrit: vitarka-vicāra, Pāli: vitakka-vicāra), which characterizes the first stage of meditation and is said to be absent in later stages. Discursive thought is often presented as linked to a misapprehension of, or illusion about, the composition of the world: a way of thinking about the world that occludes its true nature.

This misperception causes us to experience craving (Sanskrit: tṛ́ṣṇā, Pāli: taṇhā) – what we believe about ourselves and the world causes us to want certain things: Satisfaction of desire, possessions, wealth, status, and so on. These cravings are often frustrated (in part by necessity, because they are caused by a mistaken perception of the world), and it is this frustration that causes us to experience suffering. Even the satisfaction of desire can only lead to more desire, and thus, furthers suffering, rather than overcoming it.

Suffering, in Buddhism, is thus different from things like pain: While pain is a simple reality, whether we suffer is a question of our attitude toward the world. The hopeful message originating from these ideas is then that by changing, by correcting our perception of the world, we can eliminate the causes of suffering.

In a nutshell, these are the first three of the Four Noble Truths, the core teaching of Buddhism. The First Truth acknowledges the reality of suffering, and its unavoidable presence due to the essential unsatisfactoriness of the world as it presents itself in our ordinary perception. The Second Truth locates the origin of suffering with our craving, which in turn is a result of our ignorance about the true nature of the world. The Third Truth draws the conclusion that the end of suffering lies in realizing our ignorance, which goes along with the cessation of craving, essentially exposing it as based on false premises.

Finally, the Fourth Noble Truth concerns the way out of the whole quagmire. In Buddhist tradition, this is called the Noble Eightfold Path. Intriguingly, it’s not so much a collection of teachings as a set of practices: You can’t reason yourself out of your misperception of the world, you can only train yourself out of it.

The dual-process, model-vs-neural-net picture of cognition can be employed to shed some light on these Buddhist ideas. In order to unpack them, we first have to take a closer look at the notion of modeling.

As we have seen, human-style thought differs from neural net-style recognition at least in part due to the presence of semantic, context-appropriate knowledge about the objects of our thoughts. We employ models both to justify and explain ourselves, to ourselves and others. But what is a model?

In his book Life Itself, theoretical biologist Robert Rosen conceived of the notion of modeling as a certain kind of structural correspondence between a model and its object. Take the relation between an orrery and the solar system: The orrery’s little metal beads are arranged in a certain way, and subject to certain constraints that dictate possible configurations. This arrangement mirrors that of the planets around the sun, and the constraints ensure that for every state of the model, there is a corresponding state of the solar system, and vice versa. It does not matter that on the one side, the constraints are implemented via wires and gears, while on the other, they are due to Newton’s law of gravitation: For one system to model another is merely to mirror relationships between parts, not to replicate its inner composition.

Indeed, as noted earlier, if we required that a model equals the system it models in every respect, we would end up with a copy, not with a model. Thus, in order for a model to be a model, it must not merely replicate certain properties of the original, but it must also differ in certain ways. Maps model the territory in that the placement of certain map markers mirrors that of cities, mountains, and lakes; they don’t model it in being composed of earth, water and vegetation. The map’s legend explains what each marker stands for, and is what ultimately allows us to use them for navigation.

Confusion then comes about once we mistake features of the model for features of the system it models. Since we can model the motion of the planets by clockwork, one might conclude that the heavens themselves are animated by a giant celestial clockwork, stars and planets dotted on crystalline spheres revolving around one another. But this is just, as Korzybski memorably put it, mistaking the map for the territory: Just because we can model a system a certain way, doesn’t mean that it actually is that way.

The confusions that Buddhism claims lie at the origin of our suffering can be seen to be of just this kind. However, they are not merely accidental properties of the modeling system that get projected onto the original, but rather, are inherent to the notion of modeling itself.

Buddhism proposes three truths in particular we are systematically mistaken about:

- Impermanence (Sanskrit: anitya, Pāli: anicca): Everything that arises does so in only a temporary fashion, and dissolves again, locked into a chain of cause and effect (what’s sometimes called conditioned existence).

- Emptiness (Sanskrit: śūnyatā, Pāli: suññatā): Everything is ultimately free of any particular inner character or intrinsic nature (Sanskrit: svabhāva, Pāli: sabhāva).

- No-Self (Sanskrit: anātman, Pāli: anattā): There is no fixed, unchanging ‘core self’; we ourselves are free of inner nature.

These are not independent of one another: In order for things to be impermanent, they can’t have a fundamental inner nature – for otherwise, where would that nature go when it changes? If it changes itself, it can’t be immutable, but then, in what sense was it really an inner nature?

Western philosophy, by contrast, starts out with the notion of substance: The fixed, unchanging fundamental stuff, out of which ultimately everything else arises. Thales of Miletus, widely considered to be the ‘first philosopher’ (more likely, simply the first of whose thought a record exists), proclaimed that ‘everything is water’. As first stabs go, this isn’t an altogether bad one: The mutability of water is readily accessible to observation – you cool it, and it solidifies as ice; you heat it, and it becomes a gas. Yet, it manifestly remains, in some sense, the same kind of thing (today, we would say it remains H2O). Why could it not have other forms, that arise under other conditions?

So here we have an example of all phenomena ultimately boiling down to a single kind of thing, which itself forms the true, immutable inner character of the world. Of course, while a great number of questions get answered in this way, new ones immediately arise: Why water? And, perhaps more pressingly, whence water? If everything is grounded in water, what grounds water itself?

This has spawned a productive tradition in philosophy. Not long after Thales, Heraclitus came along to proclaim that no, everything is actually fire. Again, not a bad guess as such: Fire may be brought forth from many things, and almost everything can be ‘dissolved’ into fire, after all. Parmenides took the game of substances to its extreme, and proposed that all change is, in fact, illusory – indeed, as his disciple Zeno of Elea sought to show, logically impossible. To Democritus, there were just ‘atoms and the void’, and he is thus often perceived as the progenitor of present-day views, where everything is quantum fields, bubbling away in the modern-day void of the quantum vacuum.

Buddhist Goddess of Mercy Robot? (Inserted by Editors — for fun):

3 Fundamental Natures and the Library of Babel

From a Buddhist point of view, all of this is more or less barking up the wrong tree. More accurately, such views are elements of conventional (as opposed to ultimate) truth. That is, they’re not false, and certainly far from worthless – as a physicist by training, I’m far from suggesting that anybody who studies quantum fields is wasting their lives, or caught up in some intricate illusion.

But conventional truth is the truth of models, of discursive thought, and we must take care not to confuse our models with the world. And it is, in fact, a characteristic of models that they boil down to a certain fundamental nature, a set of facts from which everything else can be derived, but which themselves admit no further justification. As an analogy, take axiomatic systems in mathematics: Everything that can be proved within such a system flows logically from the axioms. But why those axioms?

The reason for this ‘fundamentalism’ found within every model is, perhaps counter-intuitively, its incomplete, partial nature. It’s an intriguing fact from information theory that a part can contain more information than the whole it is a part of: While certain wholes admit of a very short and nevertheless perfectly accurate description – ‘a glass sphere of 10 cm diameter’ perfectly and exhaustively describes said object – parts of it may take much more information to characterize completely. Just think of dropping the sphere and attempting to describe a single shard in complete detail: Volumes of text would not suffice.

The same goes, for instance, for mathematical entities: The natural numbers are completely characterized by a brief list of statements (the so-called Peano axioms), but specifying an arbitrary subset of them may amount to listing all of its members – which might be an infinite list.
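Kolmogorov complexity itself is uncomputable, but the length of a compressed file gives a crude, computable upper bound on description length. The sketch below uses Python’s standard `zlib` module as that stand-in (an assumption made for illustration, not a measure the argument relies on) to compare the whole set of numbers below `N` with a randomly chosen subset, each written out as a bitmask.

```python
import random
import zlib

N = 100_000  # consider the numbers 0 .. N-1


def as_bitmask(members, n=N):
    """Encode a subset of range(n) as bytes: bit k is set iff k is a member."""
    bits = bytearray((n + 7) // 8)
    for k in members:
        bits[k // 8] |= 1 << (k % 8)
    return bytes(bits)


def compressed_size(data):
    """Length of the zlib output: a crude, computable upper-bound stand-in
    for description length (true Kolmogorov complexity is uncomputable)."""
    return len(zlib.compress(data, 9))


rng = random.Random(42)
whole = range(N)                                    # "all numbers below N"
part = [k for k in range(N) if rng.random() < 0.5]  # an arbitrary, patternless subset

print("whole:", compressed_size(as_bitmask(whole)), "bytes")
print("part: ", compressed_size(as_bitmask(part)), "bytes")
```

Not every part is costly: the even numbers, for instance, give a bitmask of one repeated byte and compress about as well as the whole. It is the arbitrary, patternless parts that resist short description, like the shard of the broken sphere.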

This employs a measure of information content called description-length complexity, or Kolmogorov complexity, after the Russian mathematician Andrey Kolmogorov, one of its originators. My claim is now that every model has a non-zero description-length complexity – but crucially, the world itself may not. If the description-length complexity then measures the ‘fundamental facts’ that specify the model, such as the statement ‘everything is water’, then having a fundamental character is a property of models, but not necessarily of the world they seek to model. The world would then be empty of such a fundamental nature.

Another curious fact about Kolmogorov complexity will help us demonstrate this. The Kolmogorov complexity of a part is essentially the same as that of what’s left over after taking that part away (up to a small term for describing the whole itself, which is negligible when the whole is simple). It’s again like the shard and the glass sphere: If you just break out one shard, then specifying the exact form of the shard also serves to specify the hole it left – and thus, the complete shape of the sphere minus the shard. Or, take a set of numbers, and remove some of them: Once you can describe which ones you’ve taken away, you can also describe which ones are left over.
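The shard-and-hole argument can be sketched by describing subsets of a simple whole (here, as an assumption, the numbers `0` to `N - 1`) as bitmasks. Given the short description of the whole, a single XOR turns the description of any part into the description of its remainder and back, which is why the two carry essentially the same information.

```python
import random

N = 1_000             # the whole: the numbers 0 .. N-1, which has a very short description
WHOLE = (1 << N) - 1  # bitmask with all N bits set


def encode(members):
    """Describe a subset of range(N) as an integer whose bit k is set iff k is a member."""
    mask = 0
    for k in members:
        mask |= 1 << k
    return mask


def decode(mask):
    """Recover the subset of range(N) described by a bitmask."""
    return {k for k in range(N) if (mask >> k) & 1}


def remainder(part_description):
    """Turn a description of a part into a description of what is left over."""
    return part_description ^ WHOLE


rng = random.Random(7)
shard = {k for k in range(N) if rng.random() < 0.3}  # an arbitrary "shard"
hole = decode(remainder(encode(shard)))              # what the shard leaves behind

print(len(shard), "elements in the shard,", len(hole), "in the rest")
```

Taking nothing away, `remainder(encode(set()))` returns the mask of the whole: the description of ‘nothing’ turns directly into the description of ‘everything’.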

Now, take everything. If you take nothing away, you’ve still got everything left; and nothing has zero information content. Consequently, everything, itself, must also have zero information content.

Like the shard fitting into the hole it left in the glass sphere to create an object of much less information content than either of its parts, if you keep putting all the ‘parts’ of everything together, what you end up with ultimately has no information content – no fundamental nature – at all. It’s empty.

If that seems a bit suspiciously easy, consider how information content shrinks with each specification, or distinction, we remove. Take Borges’ Library of Babel, which contains every possible book written with an alphabet of 25 characters. To fully specify the library’s contents, we need only specify those characters and, to keep matters finite, an upper limit on the length of the books.

These few words specify an enormous number of texts. Each individual volume within the library is likely to take much more information to specify (excluding oddities, such as a book that only contains the letter ‘a’ repeated a hundred thousand times). Now, consider how we can reduce the number of books by adding further specifications: Say, every possible book of the given length written in the given alphabet that starts with the letter ‘q’.

We have increased the total information content of the library – since it now takes a longer description to fully specify it – but simultaneously, we have decreased the total number of books within it. This is due to us adding a single, further distinction: That the books should start with a certain letter.
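The counting behind this can be checked on a toy version of the library. Borges’ library uses 25 symbols; the maximum length of six characters is an assumption chosen only to keep the numbers printable. Adding the distinction ‘starts with q’ divides the number of books by exactly 25, so singling out one book in the smaller library takes log₂ 25 ≈ 4.64 fewer bits; those bits now sit in the longer description of the library itself.

```python
import math

ALPHABET = 25  # Borges' 25 orthographic symbols
MAX_LEN = 6    # toy upper limit on length (an assumption; real volumes are far longer)


def library_size(fixed_prefix=0, alphabet=ALPHABET, max_len=MAX_LEN):
    """Count the texts of length 1..max_len whose first `fixed_prefix`
    characters are pinned down by extra distinctions such as 'starts with q'."""
    shortest = max(1, fixed_prefix)
    return sum(alphabet ** (n - fixed_prefix) for n in range(shortest, max_len + 1))


for prefix in (0, 1, 2):
    size = library_size(prefix)
    # log2(size): bits needed to pick out one book inside this library
    print(f"{prefix} fixed letter(s): {size:>11,} books, {math.log2(size):5.2f} bits per book")
```

Running the loop in the other direction, from more fixed letters to fewer, is the limiting procedure of the next paragraphs: each distinction dropped enlarges the library and shortens its description.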

Conversely, removing distinctions decreases the total information content while enlarging the library itself: say, we drop our requirement that all books are written using the same 25 characters, allowing arbitrary character sets instead, or we remove the requirement that they be books, allowing all manner of written texts, or any objects at all.

This introduces a limiting procedure: With each removed distinction, the content of the library gets less stringently specified, and thus, the number of elements it contains grows. Taken to its logical conclusion, we are left with a ‘library’ containing every object, while having no information content whatsoever – since if there were any information content left, we could erase that final distinction, adding the objects previously excluded by it to our ‘library’.

This ties into Buddhist teachings in two further ways. The world, taken as a whole, contains no distinctions – as for each distinction, it would acquire information content, and consequently, a fundamental nature. This harkens back to the non-dichotomic nature of reality in Buddhist thought: There are no fundamental distinctions between self and other, or between the things within the world (after all, there are also no fundamental natures upon which these distinctions could supervene).

Furthermore, individual objects arise from the world by drawing a distinction – the distinction between the shard and the rest of the glass sphere, say. Just as, in art, the ground arises together with the figure, phenomena arise in mutual interdependence. This leads to the doctrine of dependent origination (Sanskrit: pratītyasamutpāda, Pāli: paṭiccasamuppāda), which states that nothing exists independently, of its own power, so to speak – in contrast to the Western notion of substance, which exists purely of its own accord.

The above should be read in the spirit of explanatory metaphor, as is often offered in Buddhist texts. Compare the following passage from the famous “Sheaves of Reeds” discourse:

It is as if two sheaves of reeds were to stand leaning against one another. (…) If one were to pull away one of those sheaves of reeds, the other would fall; if one were to pull away the other, the first one would fall.

That is, the characteristics (whether it falls, or is held up) of each sheaf dependently arise with those of the other. Only by taking some part away is the character of what is left over made manifest – as it is with the information content of a partial system.

On this view, the fundamental nature we see in the world is thus a consequence of the limited grasp of our models, and hence, of their non-zero information content. We can think of the shortest description of a given model as its axioms, which suffice to derive every further true fact within the model; these axioms (like ‘everything is water’) then characterize the world according to the model.

4 The Homuncular Self

At first blush, it is pure expedience that limits the scope of our models. It is much more useful to model my immediate surroundings than to model the entire universe as a whole. However, there are in fact intrinsic limitations to modeling, which ensure that every model can only ever be partial. Thus, all model-based reasoning by necessity implicitly views the world as having a certain sort of fundamental nature.

One such limitation of modeling applies to modeling itself. How do we use, say, an orrery to model the solar system? Or a map to model the terrain we plan on exploring? By taking configurations of the orrery, and converting them, somehow, into planetary arrangements. This makes use of an assignment of parts of the orrery to parts of the solar system. For a map, this assignment is given by its legend: A triangle denotes a mountain top, green colored areas are forests, blue patches lakes.

But, the legend on the map is, first and foremost, just a set of marks, physical objects, on paper. How do we know the meaning of these marks? Well, again: We need some sort of association of these marks with what they mean. This defines, then, a sort of higher-order legend, used to explain the former. But do we then need another, third-order legend to explain this one? And a fourth, and so forth?

We’ve run into what’s known as the homunculus problem in the philosophy of mind: Directing a capacity at itself to form an explanation of itself collapses into infinite regress. This is most often encountered in the theory of vision: The idea here is that in order to see, we generate an internal representation of our external world. But who beholds this internal representation? And does this mysterious agency – the homunculus or ‘little man’ – need to form its own internal representation for its homunculus to see, and so on?

The capacity of modeling thus collapses at this point, and must either spiral into infinity, or ‘paper over’ the lacuna in its picture of the world with some unanalyzable placeholder proclaiming ‘here there be dragons’. Modeling must always at least miss that one point where the world maps onto itself. We can think of this point as follows. Consider an island. Put a map of that island somewhere onto the island itself. If the map is sufficiently detailed, then it must contain a representation of itself within itself, and within that representation another, and so on.

No matter to what level we model the regress, it will always seem to us as if the symbolic representation is just understood. If we think of the map, we understand it to refer to mountains, forests, cities and roads, rather than lines on paper. If we become conscious of the fact that we need to use some form of translation to interpret the map – if we have to consult the legend – then that legend, in turn, seems immediately comprehended. Asking ourselves how we understand the legend, the language it’s written in just seems intelligible. And so forth: At each level of the hierarchy, the representation seems already understood by a detached observer lurking just beyond the model, at the next higher rung.

This fixed point, rather than being represented in all its dizzying infinitude, gets simply labeled ‘the self’. The self is then just the homunculus using the map to navigate the world, filling the gap left by what would otherwise be an infinite tower of representations. It forms our own, mysterious core; something ineffable (i.e. inaccessible to model-based reasoning) that nevertheless sits at our very center, that, as the central authority, seems to be the thing which all the other stuff is for. It’s the little arrow on the map, labeled ‘you are here’.

Models, by necessity, thus contain a fundamental, unchanging core (the ‘axioms’), as well as a ‘little man’ sitting at the center, beholding the model, using it to apprehend the world (the ‘self’). These arise, as Buddhist thought proclaims, in mutual dependence: Exactly because there is an unanalyzable core self within each model, the model itself is incomplete, and hence, contains a non-zero amount of fundamental information. The dichotomy between subject and object, between self and other is thus likewise a necessary consequence of model-based reasoning, and our belief that this dualism is fundamental to the world again a case of confusing map and territory.

As long as we take model-based thinking for a faithful representation of the world, we will thus consider the world to have an immutable fundamental character, and ourselves as likewise having a definite inner self. Thus confused about the true nature of the world, we become bound up in our cravings, which, due to the unsatisfactory nature of the world, lead to suffering.

It wouldn’t do, however, to merely consider the self an illusion. After all, that point where the map maps onto itself really exists – it’s just that its existence depends on the map, not on the territory it represents. It is an element of conventional truth, but truth all the same, as long as we don’t take it to be an element of the world as such. Since all reasoning is, however, based on models, avoiding that mistake seems impossible: The self is baked into all such reasoning, so we can’t reason our way out of it. The ignorance that’s at the root of suffering in Buddhist thought thus can’t simply be cured by being told how things actually are – as ignorance about the name of the third highest mountain in the world can be (Kangchenjunga, if you were wondering).

So, what’s the way out? The solution, at first glance, seems simple: Throw the models overboard, and instead, trust System 1. Go with your gut! Follow your instincts! Let your inner neural net do all the work!

However, this seems unlikely to work. If I go with my gut, I’ll eat all of the chocolate now, and feel bad later when I look at the scales. System 1 is often concerned with immediate satisfaction, as opposed to long-term planning.

5 Meditation as a Different Kind of ‘Deep Learning’

But Buddhist thought is more subtle than that. We have, through past actions informed by a misperception of the world, trained our System 1 to give in to cravings. We have accumulated bad karma: Selfish past actions have trained us to seek instant gratification. This training, I suggest, can be understood in exactly the same way as that of a neural network: Fed enough data, a network tunes itself to the regularities in that data and learns to reproduce them – this is how neural networks accomplish the impressive feats they are capable of. Depending on the training data, however, the results can range from the hilarious to the offensive, as with Microsoft’s Twitter bot Tay, which had to be shut down after it began spouting hateful, racist rhetoric. Bad training data thus leads to bad behavioral outcomes. Training System 1 with data skewed by System 2’s misapprehension of the world leads to maladjusted behavior – ignorance leads to suffering.
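To make the training analogy a little more concrete, here is a minimal Python sketch, offered purely as an illustration and not as a model of System 1. It uses a single artificial neuron (a perceptron, the simplest kind of neural network) trained with the classic perceptron rule on two hypothetical data sets. The features (immediate pleasure, long-term benefit), the labels, and the chocolate test case are invented for this example: in the ‘clean’ set, acting was good exactly when it served long-term benefit; in the ‘skewed’ set, acting was rewarded whenever it felt good right away. The same learning rule, fed different data, ends up with different habits.

```python
# A toy perceptron (one artificial neuron), written from scratch for illustration.
# The features, labels and data sets below are hypothetical, chosen only to show
# that identical learning rules produce different behavior from different data.


def predict(weights, bias, features):
    """Return 1 ('act on the impulse') if the weighted sum exceeds zero, else 0."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(data, epochs=20, lr=0.1):
    """Learn weights and a bias from (features, label) pairs with labels in {0, 1}."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            error = label - predict(weights, bias, features)
            # Perceptron rule: nudge the weights toward the correct answer.
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias


# Hypothetical features: (immediate_pleasure, long_term_benefit), each 0 or 1.
inputs = [(1, 0), (0, 1), (1, 1), (0, 0)]

# 'Clean' experience: acting was good exactly when it served long-term benefit.
clean = [(x, x[1]) for x in inputs]
# 'Skewed' experience: acting was rewarded whenever it felt good right away.
skewed = [(x, x[0]) for x in inputs]

chocolate = (1, 0)  # pleasant now, no long-term benefit
for name, data in [("clean", clean), ("skewed", skewed)]:
    w, b = train_perceptron(data)
    print(name, "->", "eat it" if predict(w, b, chocolate) else "leave it")
```

Running it prints `clean -> leave it` followed by `skewed -> eat it`: nothing in the learning rule changed, only the data it was trained on.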

Following these ingrained habits further is unlikely to lead to liberation from suffering – in fact, it will accomplish just the opposite. The solution, in Buddhist tradition, is thus to retrain our inner neural networks: To feed them better data, so that they can make better decisions no longer governed by a false perception of the world.

This training is at the core of the Noble Eightfold Path. Let’s take a brief look at two methods of training in particular: Meditation practice and the study of kōans, which is integral to Zen Buddhism.

Emphasizing practice over theory is not, in itself, a particularly shocking approach. You can’t learn to play the guitar by reading a book on guitar-playing; you actually have to practice playing the guitar, repeatedly, until you hopefully improve. The transmission of abilities thus isn’t accomplished by the mere transmission of knowledge – there are some things books can’t teach, but can only tell you how to learn.

But let’s look at how learning works in this case. You’re given a set of instructions to follow – a model, in other words. Through diligent practice, the instructions become ‘ingrained’, until you reach a point where you no longer have to actively contemplate what you’re doing, but simply act. The seasoned guitar player does not have to think about where to put her fingers to strike a certain chord, nor does she need to pay much mind to her strumming.

By practicing instructions given to you in the form of a model, working through them step by step, System 2-style, you can eventually transfer an ability into the domain of System 1, until it becomes wholly automatic and effortless.

Such model-based training is expected to be one of the features of the coming third wave of AI systems. Where a second-wave (neural-network) AI might need data sets with thousands of examples to reliably perform even comparatively simple tasks such as handwriting recognition, comparable performance may be achievable with much smaller data sets if the system is augmented by a model of how the different characters are produced.

The intention of meditative practice is then to retrain the mind – to undo the training System 1 has received through years of model-based reasoning, which takes the world to have a permanent, fundamental nature and the self to be real. Meditation thus includes an effort to silence discursive thinking. One road towards this is learning to focus attention, rather than letting it wander aimlessly, and so gradually building up an awareness of our mental contents.

This sort of training is perhaps comparable to becoming a seasoned oenophile, a wine connoisseur: By repeatedly studying an experience under controlled circumstances, such as the tasting of wine, one develops an appreciation for its subtler aspects, as System 1 accumulates training data that allows it to draw ever finer distinctions. In the same way, a skilled meditator has become a connoisseur of their own mind, able to appreciate more fully the modeling processes going on at any given time, and thus to recognize them as distinct from simply appreciating the world as it is.

The shocking part, then, is this: We cannot break out of our misperception of the world merely by acquiring the right sort of knowledge – reading the right books, listening to the right teachers. Whatever is conveyed in this way will only feed model-based thinking and keep us mired in conventional truth.

Rather, meeting the world in the right way involves the right sort of practice – doing things the right way, not merely knowing the right sorts of things. To a physicist, this is both familiar and deeply disorienting: The mathematics necessary to grasp modern physical theories can’t be mastered merely by reading math books, but must be practiced diligently, until the abstract concepts have become second nature. This is, I believe, what the great polymath John von Neumann meant when he said, “In mathematics, you don’t understand things. You just get used to them.”

But mathematics is merely a tool for model-building. It enables the student to learn the models that describe the physical world in all its gory detail. If the above is right, however, no such model can capture the world as a whole. Rather, to meet the world free from illusion means to bypass models and embrace training and diligent practice. The goal is thus not to produce an explicit understanding of the world as a whole – that is futile – but, more modestly, to shake oneself free from the errors introduced by misrepresenting the world.

This goal is also pursued in kōan practice. Take what’s perhaps the most (in-)famous example, due to the great Zen master Hakuin Ekaku:

“Two hands clap and there is a sound. What is the sound of one hand?”

One can read this as first introducing a model, and then unmaking it. A sound originates in the meeting of both hands, as the impact sends pressure waves through the air. But with only one hand, no impact can occur, and hence no sound is produced. This takes model-based reasoning to its limits.

However, a neural network would not necessarily have the same problem: It does not care about the underlying model. Indeed, stories produced by neural networks often have a certain kōan-like quality to them, since they lack internal coherence. No model fits the story, just as no model fits the kōan. Thus, a kōan can supply training data to System 1 without presenting System 2 with anything it can make sense of, helping System 1 shake itself free from the influence of model-based misperceptions. The kōan points beyond itself, offering no food to the model-based, conventional-truth engine of System 2 and instead interacting directly with System 1, training it to overcome years of mistaken impressions of the world.

This speaks to the interlocking nature of conventional and ultimate truth in Buddhist analysis. We can’t break free of our misperception through conventional reasoning alone; yet conceptual thought is needed to realize its own inadequacy. Consequently, this essay can’t do any more than provide you with a model – which, if it is to have any meaning at all, must, like the kōan or the finger pointing at the moon, point beyond itself, indicating that which can’t be said directly. Like Wittgenstein’s ladder, it can instruct only insofar as it indicates that it must be overcome. As the great Buddhist philosopher Nāgārjuna put it, “whoever makes a philosophical view out of emptiness is indeed lost” – in the memorable image of Nāgārjuna’s commentator Candrakīrti, it would be as if, upon being told by a shopkeeper that they have nothing to sell, one then asked to be sold that nothing.

Conventional and ultimate truth are themselves dependently originated: It’s only because models are always incomplete that the distinction between them arises. Ultimately, (the doctrine of) emptiness is itself empty.